Participation Instructions - Robotics

For detailed instructions, please refer to the task repository in: https://github.com/KTH-RPL/ELSA-Robotics-Challenge

The Robotics Challenge aims to benchmark federated learning approaches for large-scale imitation learning of manipulation skills in robots. To do so we propose a single track competition.

Track 1 - Federated Imitation Learning for Robotic Manipulation

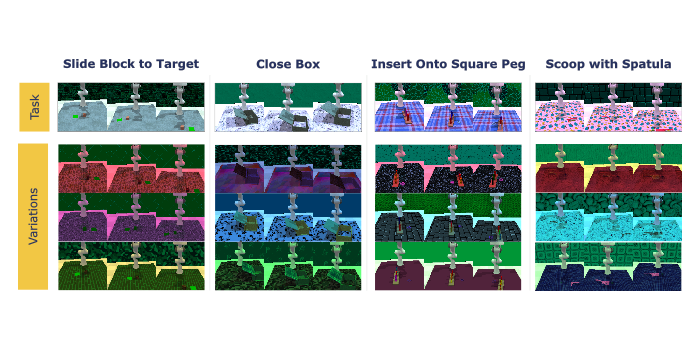

In this track, the teams must use the provided FLAME dataset to train imitation learning policies using federated learning in four manipulation tasks:

- Slide Block to Target: The goal of the robot is to drag an object to a (randomly selected) goal position;

- Close Box: The goal of the agent is to close a box, initialized with a random pose;

- Insert onto Square Peg: A fine-grained manipulation task where the goal of the agent is to place a square peg into a randomly-placed support;

- Scoop with Spatula: A task where the robot must manipulate an external object (spatula) to scoop and object on interest in the scene.

For each of these tasks, we instantiate 420 different environments and 100 demonstrations per environment, of which 400 environments are used for training, 10 for validation, and 10 for testing.

Evaluation Metrics:

For a given task, we will evaluate the participants models using the average RMSE of the predicted actions of their model against the ground-truth expert actions in the test environments. The final ranking will be given by the average RMSE across the four tasks.

General Rules:

- Participants can choose between training individual policies for each task or a generalist policy that accomplishes all tasks. However, the policies must be trained using only the provided dataset. As such, employing generative models for synthetic data augmentation or using additional datasets is strictly forbidden.

- All results must be reproducible. Participants must make models and inference code accessible to the organizers.

- Submission of the results for a single tasks is not possible. Participants must submit the results for all tasks.

For more information regarding the competition, please contact miguelsv@kth.se

Challenge News

Important Dates

2025-10-01: Submission server open

2025-12-31: Submission deadline

All deadlines 23:59 CEST